
Pandas is well suited to working with tabular data, and the most common file format for such data is CSV. However, as datasets grow, reading and writing CSV files becomes much slower.

Parquet is a column-oriented file format: values from the same column are stored together, which makes it efficient to compress and decompress. I will leave out the details and dive straight into the implementation.
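One practical consequence of the column-oriented layout is that a reader can load only the columns it needs. The small sketch below illustrates this with the `columns` argument of `pd.read_parquet`. It assumes pyarrow (or fastparquet) is installed, and `read_one_column` is a hypothetical helper name, not part of pandas:

```python
import os
import tempfile
from importlib.util import find_spec

import numpy as np
import pandas as pd


def read_one_column(df, column):
    """Round-trip df through a temporary Parquet file and load back only `column`."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.parquet")
        df.to_parquet(path, index=False)  # pandas compresses with snappy by default
        # The reader loads only the requested column's data from the file
        return pd.read_parquet(path, columns=[column])


if __name__ == "__main__":
    if find_spec("pyarrow") or find_spec("fastparquet"):
        df = pd.DataFrame(
            {"sensor_1": np.random.rand(5), "machine_status": np.random.randint(2, size=5)}
        )
        print(read_one_column(df, "machine_status"))
    else:
        print("Install pyarrow first: pip install pyarrow")
```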

First, install pyarrow so that pandas can read and write Parquet files:

```shell
pip install pyarrow
```

Let’s import some libraries:

```python
import pandas as pd
import numpy as np
import os
```

Next, create a fake dataset with 10 million rows:

```python
def fake_dataset(n_rows=10_000_000):
    # Three continuous sensor readings drawn uniformly from [0, 1)
    sensor_1 = np.random.rand(n_rows)
    sensor_2 = np.random.rand(n_rows)
    sensor_3 = np.random.rand(n_rows)
    # Binary machine status: 0 or 1
    machine_status = np.random.randint(2, size=n_rows)
    return pd.DataFrame(
        {
            "sensor_1": sensor_1,
            "sensor_2": sensor_2,
            "sensor_3": sensor_3,
            "machine_status": machine_status,
        }
    )


ds = fake_dataset()
```
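`fake_dataset` draws from NumPy's global random state, so every run produces different values. If you want repeated benchmark runs to use identical data, a minimal sketch is to build the same columns from a seeded `np.random.default_rng` generator. The function name `fake_dataset_seeded` and its `seed` parameter are hypothetical additions, not part of the original code:

```python
import numpy as np
import pandas as pd


def fake_dataset_seeded(n_rows=10_000_000, seed=0):
    """Hypothetical seeded variant of fake_dataset: same columns, reproducible values."""
    rng = np.random.default_rng(seed)  # independent generator, unaffected by np.random's global state
    return pd.DataFrame(
        {
            "sensor_1": rng.random(n_rows),  # uniform floats in [0, 1)
            "sensor_2": rng.random(n_rows),
            "sensor_3": rng.random(n_rows),
            "machine_status": rng.integers(2, size=n_rows),  # 0 or 1
        }
    )


if __name__ == "__main__":
    small = fake_dataset_seeded(n_rows=1_000, seed=42)
    print(small.head())
```

Calling it twice with the same `seed` yields identical DataFrames, which keeps timing comparisons between file formats apples to apples.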

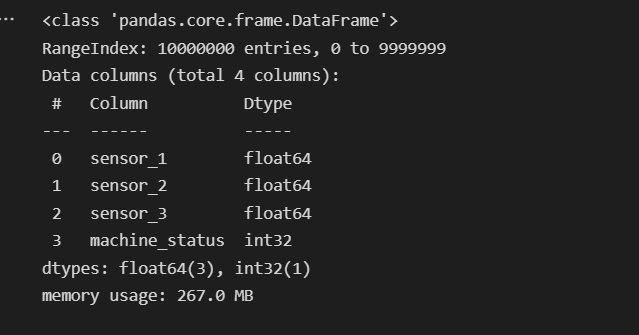

ds.info()The following output is shown:
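`ds.info()` lists each column's dtype and ends with the DataFrame's memory usage. As a rough cross-check, assuming all four columns hold 8-byte values (float64 for the sensors and int64 for `machine_status`, NumPy's default integer on most 64-bit platforms), the column data takes 10,000,000 rows × 4 columns × 8 bytes = 320,000,000 bytes, about 305 MiB. The helpers below, whose names are hypothetical, compute the measured and expected figures for any numeric DataFrame:

```python
import numpy as np
import pandas as pd


def column_bytes(df):
    """Bytes used by the column data only (the index is excluded)."""
    return int(df.memory_usage(index=False, deep=True).sum())


def expected_bytes(df):
    """Rows times the per-row width implied by each column's dtype (numeric columns only)."""
    return len(df) * sum(dtype.itemsize for dtype in df.dtypes)


if __name__ == "__main__":
    print(10_000_000 * 4 * 8 / 1024**2)  # → 305.17578125 (MiB for four 8-byte columns)
    df = pd.DataFrame(
        {"sensor_1": np.random.rand(1_000), "machine_status": np.random.randint(2, size=1_000)}
    )
    print(column_bytes(df), expected_bytes(df))
```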

Next, write the DataFrame to a CSV file and to a Parquet file, and compare how long each operation takes:
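A minimal, self-contained sketch of such a comparison times one write per format with `time.perf_counter` and records the resulting file size. It assumes pyarrow (or fastparquet) is installed for the Parquet write and skips that format otherwise; `time_writes` is a hypothetical helper name. A single run measures wall-clock time, so the numbers will vary between machines and runs:

```python
import os
import tempfile
import time
from importlib.util import find_spec

import numpy as np
import pandas as pd


def time_writes(df, out_dir):
    """Write df once as CSV and once as Parquet; return elapsed seconds and file size per format."""
    writers = {"csv": lambda path: df.to_csv(path, index=False)}
    # to_parquet needs pyarrow or fastparquet; skip Parquet instead of failing if neither is installed
    if find_spec("pyarrow") or find_spec("fastparquet"):
        writers["parquet"] = lambda path: df.to_parquet(path, index=False)

    results = {}
    for fmt, write in writers.items():
        path = os.path.join(out_dir, f"data.{fmt}")
        start = time.perf_counter()
        write(path)
        elapsed = time.perf_counter() - start
        results[fmt] = {"seconds": elapsed, "bytes": os.path.getsize(path)}
    return results


if __name__ == "__main__":
    # A smaller frame than the 10 million rows above keeps this quick to try
    df = pd.DataFrame(
        {
            "sensor_1": np.random.rand(100_000),
            "machine_status": np.random.randint(2, size=100_000),
        }
    )
    with tempfile.TemporaryDirectory() as out_dir:
        for fmt, r in time_writes(df, out_dir).items():
            print(f"{fmt}: {r['seconds']:.3f} s, {r['bytes'] / 1024**2:.1f} MiB")
```

Timing a single write keeps the sketch short; for steadier numbers you could repeat each write a few times and take the minimum.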